Introduction to GANs

Gentle introduction to GANs.

written by Gregorio Nuevo Castro ― gnuevo


GANs (Generative Adversarial Networks) are a type of generative model. That means they try to learn the probability distribution of a dataset so they can create new instances that look like the ones in the dataset. They consist of two neural networks that work together to learn the distribution of the data:

- Discriminator, and

- Generator

The Generator tries to generate fake samples that are indistinguishable from real samples. Suppose we want to create novel pictures of cats (for some strange reason we have concluded that the Internet has a shortage of cat pictures, so we want a GAN that can generate new ones). In this case our Generator will try to create fake pictures of cats that are indistinguishable from pictures of real cats. The Discriminator, on the other hand, learns to distinguish between real images of cats (coming from the dataset) and fake images of cats (created by the Generator).

At the beginning, the Generator doesn’t know anything about how to generate images of cats, so it basically outputs noise. At every iteration, the Discriminator gets better at telling what is real from what is fake, and it tells the Generator what it’s doing wrong. You can think of it as the Discriminator saying things like “I caught you because you drew a cat with only one ear” or “the eyes of a cat should be arranged horizontally, not vertically”. Of course, this is just an analogy: in reality, the communication happens through backpropagation of gradients.
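The “feedback” in that analogy is the gradient of a loss. A common choice, from the original GAN formulation, is binary cross-entropy: the Discriminator is penalized for low probabilities on real samples and high probabilities on fake ones, while the Generator is often trained with the “non-saturating” loss −log D(G(z)), which pushes D(fake) towards 1. The sketch below assumes the Discriminator outputs a probability in (0, 1); the function names are hypothetical, and the author’s code may use a different loss formulation.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the Discriminator.

    d_real: D's probabilities that real samples are real (want these near 1).
    d_fake: D's probabilities that generated samples are real (want these near 0).
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating Generator loss: small when D is fooled (D(fake) near 1)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A Discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln(2), about 1.386
```

Backpropagating these losses through each network is what turns “you drew a cat with one ear” into concrete weight updates.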

I think we can get a better idea if we see an example.

Gaussian GAN

Let’s build a GAN to approximate a Gaussian distribution. You’re probably familiar with how a Gaussian distribution looks like. They are very common, and they look like the following image

To implement the GAN we need four elements:

- A target distribution, in this case a Gaussian distribution with a given

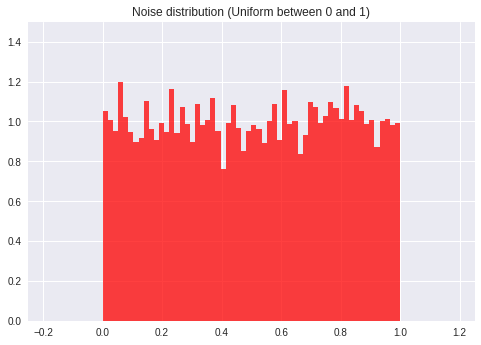

meanandstd. - A noise sampler, this is going to be the input to our Generator. It learns to convert random noise into meaningful samples.

- Our Discriminator, and

- Our Generator.
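The first two elements are easy to write down. Here is a minimal sketch, assuming a target mean of 4.0 and std of 1.25 (arbitrary illustrative values, not taken from the original code) and uniform noise on [0, 1]; the helper names `sample_target` and `sample_noise` are hypothetical.

```python
import random

def sample_target(n, mean=4.0, std=1.25, rng=random):
    # Real data: draws from the Gaussian we want the GAN to imitate.
    return [rng.gauss(mean, std) for _ in range(n)]

def sample_noise(n, low=0.0, high=1.0, rng=random):
    # Generator input: uniform noise that carries no information about the target.
    return [rng.uniform(low, high) for _ in range(n)]

rng = random.Random(42)  # seeded so runs are reproducible
real_batch = sample_target(8, rng=rng)
noise_batch = sample_noise(8, rng=rng)
print(real_batch)
print(noise_batch)
```

The Discriminator and Generator, by contrast, are models whose parameters have to be learned.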

Our idea in this tutorial is to feed uniform noise to the Generator and get samples from a Gaussian distribution at its output, something like what is shown in the picture.
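Why can a Generator turn uniform noise into Gaussian samples at all? Because a deterministic function that does exactly this exists: the inverse of the Gaussian’s cumulative distribution function (the quantile function). The GAN is never given this formula; it has to discover an approximation of some such mapping from the Discriminator’s feedback. This sketch uses Python’s `statistics.NormalDist`; the values mean=4.0 and std=1.25 are illustrative assumptions.

```python
import random
from statistics import NormalDist, fmean, pstdev

def uniform_to_gaussian(u, mean=4.0, std=1.25):
    # Inverse-CDF transform: maps u in (0, 1) to a sample of N(mean, std).
    return NormalDist(mean, std).inv_cdf(u)

rng = random.Random(0)
# Keep u strictly inside (0, 1): inv_cdf raises StatisticsError at exactly 0 or 1.
us = [rng.uniform(1e-12, 1.0 - 1e-12) for _ in range(10_000)]
xs = [uniform_to_gaussian(u) for u in us]
print(round(fmean(xs), 2), round(pstdev(xs), 2))  # close to 4.0 and 1.25
```

A GAN’s Generator plays the role of `uniform_to_gaussian` here, but learned rather than derived.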

At the end of training you should see something like the picture.

The green plot represents the training data (i.e. the Gaussian that we want to approximate). The blue plot is what our Generator produces. With the right hyperparameters and enough training, it can get a fair approximation of the target distribution.
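To make the whole adversarial loop concrete without a deep-learning framework, here is a deliberately tiny, hypothetical sketch in plain Python. It is not the author’s code: it assumes a linear Generator G(z) = w·z + c and a Discriminator whose logit is quadratic in x, with hand-derived gradients, gradient clipping for stability, and made-up hyperparameters. Because a linear map can only shift and stretch uniform noise, this Generator can at best match the target’s mean and spread, not its bell shape (that is what a neural-network Generator is for). Toy GANs like this may also oscillate rather than settle.

```python
import math
import random

def sigmoid(s):
    # Numerically safe logistic function (math.exp never overflows here).
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def clip(g, limit=5.0):
    # Bound each averaged gradient so a single update cannot blow up a parameter.
    return max(-limit, min(limit, g))

def train_toy_gan(mean=4.0, std=1.25, steps=2000, batch=64, lr=0.01, seed=0):
    rng = random.Random(seed)
    w, c = 1.0, 0.0          # hypothetical Generator: G(z) = w*z + c
    a, b, d = 0.0, 0.0, 0.0  # hypothetical Discriminator logit: s(x) = a*x^2 + b*x + d

    def logit(x):
        return a * x * x + b * x + d

    for _ in range(steps):
        real = [rng.gauss(mean, std) for _ in range(batch)]
        noise = [rng.uniform(0.0, 1.0) for _ in range(batch)]
        fake = [w * z + c for z in noise]

        # Discriminator step: descend on -mean(log D(real)) - mean(log(1 - D(fake))).
        ga = gb = gd = 0.0
        for x in real:
            g = sigmoid(logit(x)) - 1.0  # dLoss/ds for a real sample
            ga += g * x * x
            gb += g * x
            gd += g
        for x in fake:
            g = sigmoid(logit(x))        # dLoss/ds for a fake sample
            ga += g * x * x
            gb += g * x
            gd += g
        a -= lr * clip(ga / batch)
        b -= lr * clip(gb / batch)
        d -= lr * clip(gd / batch)

        # Generator step (non-saturating loss): descend on -mean(log D(G(z))).
        gw = gc = 0.0
        for z in noise:
            x = w * z + c
            g = (sigmoid(logit(x)) - 1.0) * (2.0 * a * x + b)  # dLoss/dx via chain rule
            gw += g * z
            gc += g
        w -= lr * clip(gw / batch)
        c -= lr * clip(gc / batch)

    return w, c

w, c = train_toy_gan()
# G(z) = w*z + c with z ~ U(0, 1) has mean c + w/2 and std |w| / sqrt(12).
print("generated mean:", c + w / 2, "generated std:", abs(w) / math.sqrt(12))
```

Alternating one Discriminator step with one Generator step, as here, is the basic GAN training pattern; real implementations replace the hand-written gradients with automatic differentiation.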

Anyway, it’s better if you check the code and go through it yourself. It’s very simple and fully guided, so you shouldn’t have any problems: Simple Gaussian GAN code